At Sensr we recently had the opportunity to serve as an implementation partner on the DAP²CHEM project, an innovative project that aims to stimulate the transition of chemical and life science companies towards Industry 4.0. The project was a collaboration between top Flemish research institutes and top players in the chemical and life science space with a Flemish presence: Procter and Gamble, Ajinomoto Bio-Pharma Services, and Janssen. In this article we share what we learned about machine learning at the edge during that project.

Why ML at the Edge?

Most of our interactions with systems backed by machine learning (ML) happen over the internet. We send data to an ML model in the cloud for inference, wait a few (milli)seconds, and get a response with the results. At Sensr we often encounter Industrial Internet of Things (IIoT) systems where this pattern for ML inference can be prohibitive due to:

- The latency inherent to communication over the internet. The (milli)second delay that we mentioned might be long enough for the window in which the model's output is useful to close. For example, a faulty item on a production line might already be too far down the conveyor belt to be discarded.

- The absence of stable connectivity to the internet. This can be due to the environment the system operates in, or due to the nature of the device producing the data. ML-backed systems must be able to provide at least the same level of reliability as the systems they are replacing or improving upon in order to be viable. Hence, they must be able to properly function even when connectivity drops (un)expectedly.

- The high velocity and volume at which data is produced. In addition to amplifying the issues caused by the previous two points, sending all of this data to the cloud for processing, whether in batch or in real time, would incur a considerable cost. This is especially the case when the number of edge devices that produce data grows into the thousands.

- Although less relevant for industrial applications of IoT, laws and regulations may be in place for sensitive data, in order to protect the privacy of individuals. In this case, sending certain information to the cloud might be prohibited, or the risk of interception and leakage may outweigh the benefit of processing the data in the cloud.

By deploying ML models closer to the source of the data they process, we can eliminate the need for internet communication in our flow. This increases the viability of some interesting industrial use cases of ML, including:

- The integration of computer vision models in visual inspection, security, and safety systems, where inclusion of ML into the loop can only be beneficial if the rest of the system can react to the output of the models in real time.

- Performing predictive maintenance using data collected by audio and vibration sensors attached to industrial machinery. When the data collected by a sensor indicates anomalous behavior, real-time contingency measures can be taken to prevent damage to the machine or the environment it operates in.

Challenges and Considerations

Moving inference to the edge shifts the difficulty to deployment: models have to be delivered to, and kept up to date on, the edge devices themselves. Three challenges stand out:

- Scale. Models may need to be rolled out to thousands of devices, which rules out updating them one by one.

- Network restrictions. Edge devices often sit behind corporate firewalls that strictly police inbound communication, so pushing updates to a device from the outside is frequently not an option.

- Intermittent connectivity. A device may be offline at the moment a new model is released, and it must still receive the update once it is back online.

Deployment Pattern

We boiled down the reference architectures of Amazon Web Services (AWS) and Microsoft Azure for enabling ML inference at the edge to their essence, which is the pattern described by the following sequence diagram:

A central part of the flow is some entity that represents the desired state of the edge device. This entity is part of your “always available” IT infrastructure and can thus be reliably updated as part of a continuous deployment flow. The associated edge device periodically polls this entity for state changes and updates its own state when necessary. This approach solves the three deployment-related challenges we listed earlier:

- The desired state entity can be updated programmatically, that is, very efficiently, for thousands of devices.

- Since the edge device initiates all communication, the traffic is considered outbound. Outbound communication is often less strictly policed by corporate firewalls than inbound communication, making it easier to comply with existing firewall rules.

- If the device is offline while the desired state is updated, the change will be picked up by the device once it is back online.
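The pattern can be illustrated with a small in-memory sketch. The class names `DesiredStateStore` and `EdgeDevice` are hypothetical stand-ins for the cloud-side entity and the device, and a version counter is just one assumed way of detecting changes; real services track this for you.

```python
from dataclasses import dataclass, field


@dataclass
class DesiredStateStore:
    """Hypothetical stand-in for the always-available desired state entity."""
    version: int = 0
    state: dict = field(default_factory=dict)

    def update(self, **changes):
        # Called from the deployment pipeline; the device does not need to be online.
        self.state.update(changes)
        self.version += 1


@dataclass
class EdgeDevice:
    """Hypothetical stand-in for an edge device that pulls its desired state."""
    applied_version: int = -1
    state: dict = field(default_factory=dict)

    def poll(self, store: DesiredStateStore) -> bool:
        """Outbound call initiated by the device; returns True if its state changed."""
        if store.version == self.applied_version:
            return False
        self.state = dict(store.state)
        self.applied_version = store.version
        return True


store = DesiredStateStore()
device = EdgeDevice()
device.poll(store)  # initial sync

# Two updates are published while the device is offline ...
store.update(model="model-v1")
store.update(model="model-v2")

# ... and the device converges on the latest desired state the next time it polls.
changed = device.poll(store)
```

Because the device only ever compares its own applied version with the store's, missed intermediate updates do not matter: it always ends up in the most recent desired state.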

You could, in theory, roll your own implementation, but both AWS and Azure offer a suite of IoT services that help you implement this pattern quickly, as we will show in the following section.

A running start with Azure IoT

The Azure IoT suite of services can give you a running start when trying to implement this pattern. Azure IoT Hub and Azure IoT Edge together provide a comprehensive solution for managing IoT devices and processing IoT data at scale:

- Azure IoT Hub is a cloud-based service that enables bidirectional communication between IoT devices and the cloud, allowing for device management, data ingestion, and monitoring at scale. Device and module twins in IoT Hub are used to maintain the desired state of the IoT devices and their associated modules.

- Azure IoT Edge extends the capabilities of Azure IoT Hub by providing a way to run cloud workloads locally on IoT Edge devices, enabling them to perform edge computing and analytics, even when the devices are offline or have limited connectivity. One of the responsibilities of the runtime that comes with IoT Edge is handling communication between the IoT Edge device and the cloud.

In what follows we assume that you already:

- Set up an IoT Hub. Be sure to choose a tier that supports Azure IoT Edge.

- Created and provisioned an IoT Edge device.

- Have a basic understanding of how to develop IoT Edge modules.

As an example we set up a free tier IoT Hub and provisioned an IoT Edge device that we set up in a local virtual machine. To serve as a starting point we have also created a custom Python IoT Edge module, called model_loader, based on the Python Cookiecutter template.

By setting up these Azure-provided building blocks we have already “implemented” three components crucial to our pattern: an edge device, a way to set its desired state via device and module twins in the cloud, and communication between the edge device and the cloud. As such, most of the heavy lifting has been done for us by Microsoft. All that is left for us to do is some fine-tuning to enable the rest of the pattern.

Setting up artifact storage

IoT Edge modules run in containerized environments. Hence, when a module or the device restarts, any files that were created in the module’s filesystem, such as downloaded model artifacts, are lost. We can give our custom module access to persistent storage on the host file system by extending the module’s create options in the deployment manifest with a storage binding, where Source and Target contain the host and module storage paths, respectively; both must be absolute paths. The deployment manifest for our model_loader module looks as follows:
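As a rough sketch of what such create options could contain, the snippet below builds them in Python and serializes them to JSON. Both paths are placeholders, and the `Mounts` layout follows Docker's container-create API; it is an assumption rather than a copy of the original manifest.

```python
import json

# Hypothetical paths; replace them with your own absolute paths.
HOST_STORAGE_PATH = "/srv/model_store"   # folder on the edge device (Source)
MODULE_STORAGE_PATH = "/app/models"      # folder inside the module's container (Target)

create_options = {
    "HostConfig": {
        "Mounts": [
            {
                "Type": "bind",
                "Source": HOST_STORAGE_PATH,
                "Target": MODULE_STORAGE_PATH,
            }
        ]
    }
}

# In a deployed manifest the create options are stored as a JSON string.
create_options_json = json.dumps(create_options)
print(create_options_json)
```

Anything the module writes below the Target path then ends up in the Source folder on the host and survives container restarts.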

Reacting to desired state changes

In Azure IoT Hub we can set the desired state for a device/module through the desired properties object in the device/module twin. For example, we set the following desired properties on the twin of our model_loader module to indicate which model we want the module to load, where to save it, and which checksum to use for validating the downloaded model:
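As an illustration, the desired properties could be shaped as in the sketch below. Only `modelFileName` is a key name that appears in this article; `modelUrl` and `modelMd5` are hypothetical names, and the URL and checksum values are placeholders.

```python
import json

# Desired properties as they would sit inside the module twin.
# "modelUrl" and "modelMd5" are assumed key names; the values are placeholders.
twin_fragment = {
    "properties": {
        "desired": {
            "modelUrl": "https://example.com/models/model.onnx",
            "modelFileName": "model.onnx",
            "modelMd5": "<md5-hex-digest>",
        }
    }
}

print(json.dumps(twin_fragment, indent=2))
```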

For the purpose of this article we are using a publicly available model, but under normal circumstances you would host your model in a private repository.

The module template that we used to create our model_loader module does not implement any handling of changes in the desired state, so-called twin patches. Further down we provide a basic implementation of an incoming twin patch handler, which is invoked when desired properties are updated on the module twin. Inside the handler we also set a reported property, to provide some form of feedback to IoT Hub.

The handler shown below performs the following steps when a twin patch is received:

- Check whether the twin patch is valid, that is, contains all expected keys.

- Extract the model’s download URL, MD5 checksum, and local file name from the twin patch.

- Check whether the model already exists locally. If so, return in order to prevent an unnecessary download.

- Download the model and validate the downloaded file using the MD5 checksum from the twin patch.

- Set the reported property lastModelUpdate to the current time to indicate when the model was last updated.
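The steps above can be sketched with the standard library alone. This is a minimal sketch under stated assumptions, not the module's actual code: the key names `modelUrl` and `modelMd5`, the `MODEL_DIR` path, and the function names are hypothetical. The function returns the reported properties instead of sending them, so that the SDK call stays with the caller.

```python
import hashlib
import os
import shutil
import urllib.request
from datetime import datetime, timezone

MODEL_DIR = "/app/models"  # hypothetical; the Target path of the storage binding
# "modelUrl" and "modelMd5" are assumed key names; "modelFileName" is from the article.
EXPECTED_KEYS = ("modelUrl", "modelMd5", "modelFileName")


def md5_of_file(path, chunk_size=1 << 20):
    """Compute the MD5 hex digest of a file without loading it into memory at once."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def handle_twin_patch(patch, model_dir=MODEL_DIR):
    """Process a desired-properties patch.

    Returns the reported properties to send back, or None if nothing was downloaded.
    """
    # 1. Validate: ignore patches that lack any of the expected keys.
    if not all(key in patch for key in EXPECTED_KEYS):
        return None

    # 2. Extract the download URL, checksum, and local file name.
    url, checksum, file_name = (patch[key] for key in EXPECTED_KEYS)
    target = os.path.join(model_dir, os.path.basename(file_name))

    # 3. Skip the download if the model already exists locally.
    if os.path.exists(target):
        return None

    # 4. Download to a temporary file and validate it before moving it into place.
    tmp_path = target + ".part"
    with urllib.request.urlopen(url) as response, open(tmp_path, "wb") as out:
        shutil.copyfileobj(response, out)
    if md5_of_file(tmp_path).lower() != checksum.lower():
        os.remove(tmp_path)
        raise ValueError("checksum mismatch for " + file_name)
    os.replace(tmp_path, target)

    # 5. Report when the model was last updated (UTC, ISO 8601).
    return {"lastModelUpdate": datetime.now(timezone.utc).isoformat()}
```

Downloading to a temporary file first means that a partially downloaded or corrupted model never appears under its final name, which keeps the "already exists" check in step 3 trustworthy.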

In our module’s main.py file we register the handler using the following code snippet:
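A registration along these lines could look like the sketch below. It assumes the asynchronous module client from the azure-iot-device package, which exposes the `on_twin_desired_properties_patch_received` property and the `patch_twin_reported_properties` coroutine. The helper name `register_twin_patch_handler` and the convention that `handle_patch` returns the reported properties (or None) are our own assumptions.

```python
import asyncio


def register_twin_patch_handler(client, handle_patch):
    """Attach handle_patch to a module client (async azure-iot-device client assumed).

    handle_patch is a blocking function that takes the patch dict and returns
    the reported properties to send back, or None when there is nothing to report.
    """

    async def on_patch(patch):
        # Run the blocking handler in a worker thread so the event loop stays responsive.
        loop = asyncio.get_running_loop()
        reported = await loop.run_in_executor(None, handle_patch, patch)
        if reported:
            # Feed the result back to IoT Hub through the module twin.
            await client.patch_twin_reported_properties(reported)

    client.on_twin_desired_properties_patch_received = on_patch
    return on_patch
```

In main.py this would be called once, right after the client has been created, for example with `IoTHubModuleClient.create_from_edge_environment()`, and before the module starts waiting for messages.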

Testing the setup

After implementing and deploying the handler, the logs of our model_loader module look as follows:

We can see that the twin patch handler was invoked twice. The first time, triggered by the module starting up, the handler finds an existing model file, which was downloaded during development of the module. The second invocation was triggered by editing modelFileName in the module twin through the Azure Portal UI. A file with that name does not yet exist and therefore a download is triggered.

If we then look in the edge device’s local folder that we set as mount source in the deployment manifest, we see both files mentioned in the logs. These won’t be lost if the device or the module’s container is restarted and can be accessed by other modules through the same mounting mechanism.

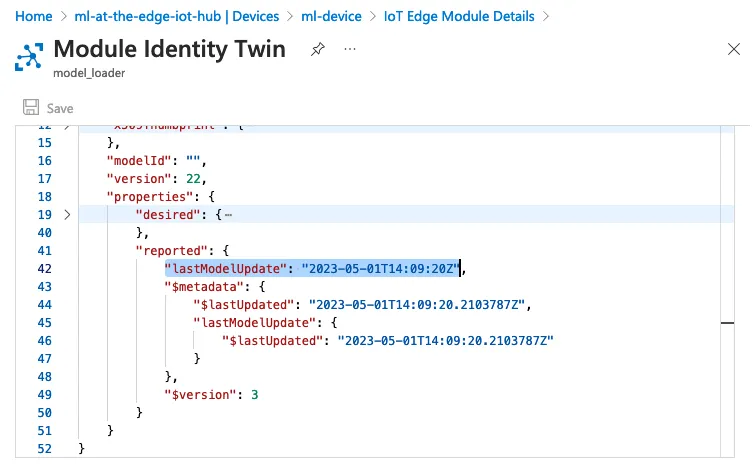

We can also verify that the model file was updated by looking at the reported properties in the module’s twin in Azure Portal. We see that the lastModelUpdate property has been set to the timestamp when our handler successfully downloaded the model file:

Conclusion

In this article, we cover the need for ML at the edge and associated deployment challenges. We also describe a generic pattern for tackling said challenges and provide an example implementation using the Azure IoT Edge framework. I hope that this article helped you in a meaningful way and that you had as much fun reading it as I had writing it.

Cheers,

— Andrei